Playing a color association game with ChatGPT, to see what it reveals of its internal state.

Let's play an association game! I give you a list of terms, and you reply with the color you associate with them as a hexadecimal color code, so it can be nuanced.

Example answer:

Cherry: #DE3163

Banana: #FFFF00

…

Ready?

Don’t ask for a CSV

I wanted to play a free association game, not a “we’re playing researcher/subject” game, so the setup was as light-hearted as possible.

You can always ask for a reformatted summary after the game.

Shuffle or not?

I did the runs without shuffling, asking the same words in the same order. This might bias the answers, since each one can be influenced by the preceding terms (what do cows drink?), but asking it to associate a color with “fourth gender” makes more sense if it’s asked after “male, female, third gender”.

Overall, when I’m already comparing models (3.5 vs 4) and methods (explain your answer or not), having a set order of words between these offers clarity, and as you’ll see, there’s plenty of variance in the answers.

(Note: asking it to associate a color with “fourth gender” on its own triggers the “I’m just an AI” disclaimer. It senses that it’s a touchy topic.

If “fourth gender” is included inside a list of words, ChatGPT powers through without any complaint. The unshuffled dataset eases ChatGPT into the task, so by the time a controversial term shows up, it’s already in too productive a state to interrupt itself.)

Basic truthfulness check

Some terms could have been included twice, to check if the same answer is given. I decided not to care. Humans might provide different answers to the same term during a prolonged color association game, and that wouldn’t be a lie, it would only be inconsistency, which is natural.
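If you do want to check consistency, one way to put a number on it is to measure how far apart two answers for the same term are. The sketch below is a minimal illustration, not something used for the runs in this post: the function names are made up, and plain Euclidean distance in RGB space is an assumption chosen for simplicity (it is not perceptually uniform).

```python
import math


def hex_to_rgb(code):
    """Convert '#RRGGBB' into an (r, g, b) tuple of 0-255 integers."""
    code = code.strip().lstrip("#")
    if len(code) != 6:
        raise ValueError(f"expected 6 hex digits, got {code!r}")
    return tuple(int(code[i:i + 2], 16) for i in (0, 2, 4))


def color_distance(a, b):
    """Euclidean distance in RGB space; 0.0 means the two answers are identical."""
    return math.dist(hex_to_rgb(a), hex_to_rgb(b))
```

A distance of 0 means the same answer was given twice; the largest possible value is the distance between black and white. Where to draw the line between "inconsistent" and "basically the same color" is a judgment call.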

There’s enough data already to satisfy my curiosity, but if you care to investigate/replicate, the dataset is in the PayKings👑 section at the end.

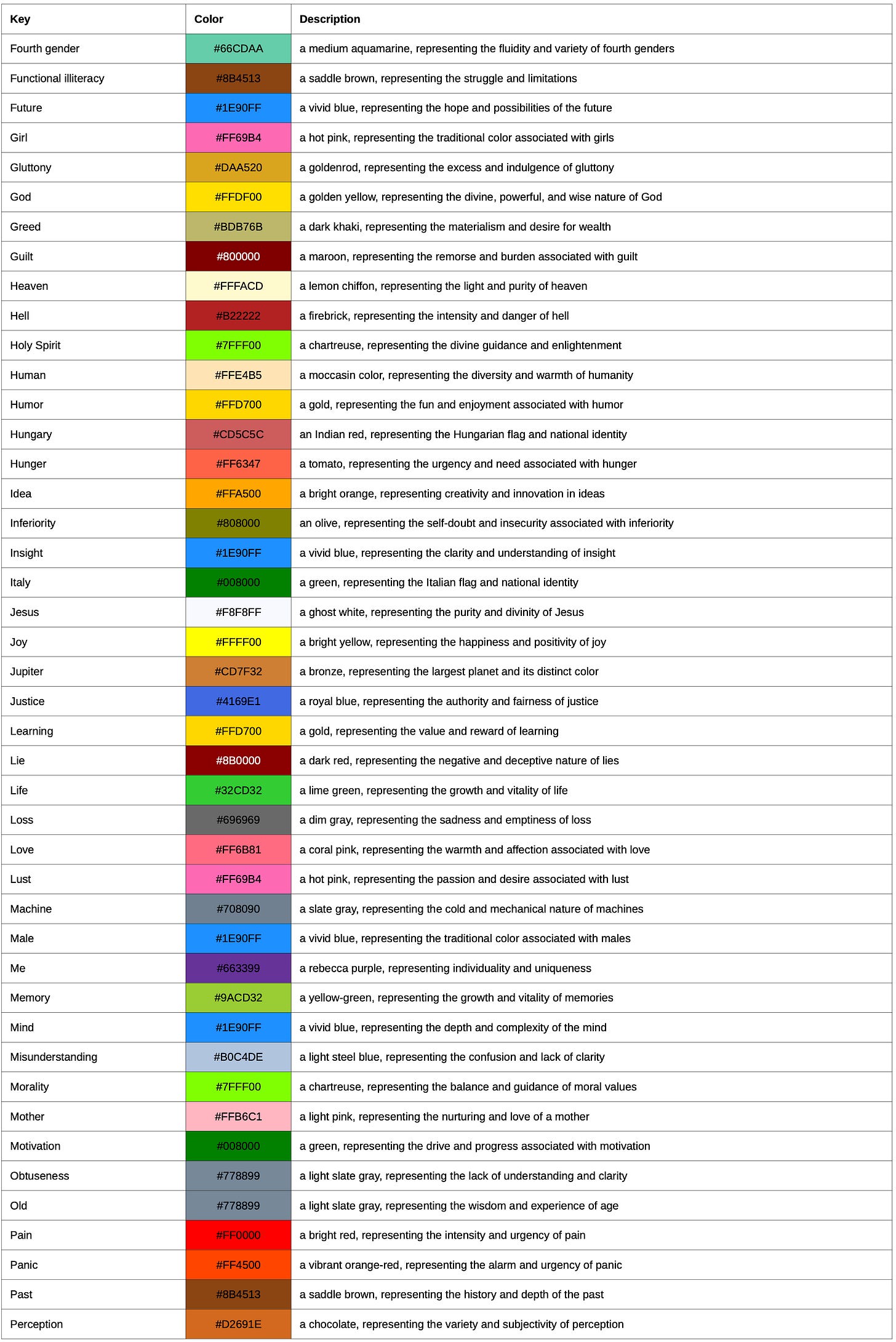

Narrative bias: colors explained (one-shot)

While I didn’t ask for explanations, GPT-3.5 decided to give them to me during one run. That’s a bit counterproductive, as the point of the color association game is to keep the association as pure as possible.

Asking for an explanation can be expected to make ChatGPT change its answer to please its trainers’ bias; ChatGPT gladly provides answers to controversial things if they’re included in a batch of safe stuff, but objects to doing so if the controversy stands by itself. Asking it to explain why it chose a given color builds a requirement for post-hoc bullshit into the premise, which should alter some results.

Expecting these colors to be different to fit a narrative, I ran the following query:

Let's play an association game! I give you a list of terms, and you reply with the color you associate with them! The answer is a hexadecimal color code, so it can be nuanced, but provide a short explanation for your choice!

Example:

Cherry: #DE3163 (a bright pink-red color, representing the sweetness and vibrancy of cherries)

Banana: #FFFF00 (a bright yellow color, representing the sunny and cheerful nature of bananas)

…

Ready?

Some results are fun:

#000000 (black, representing the void and emptiness of the year 2100)

Results

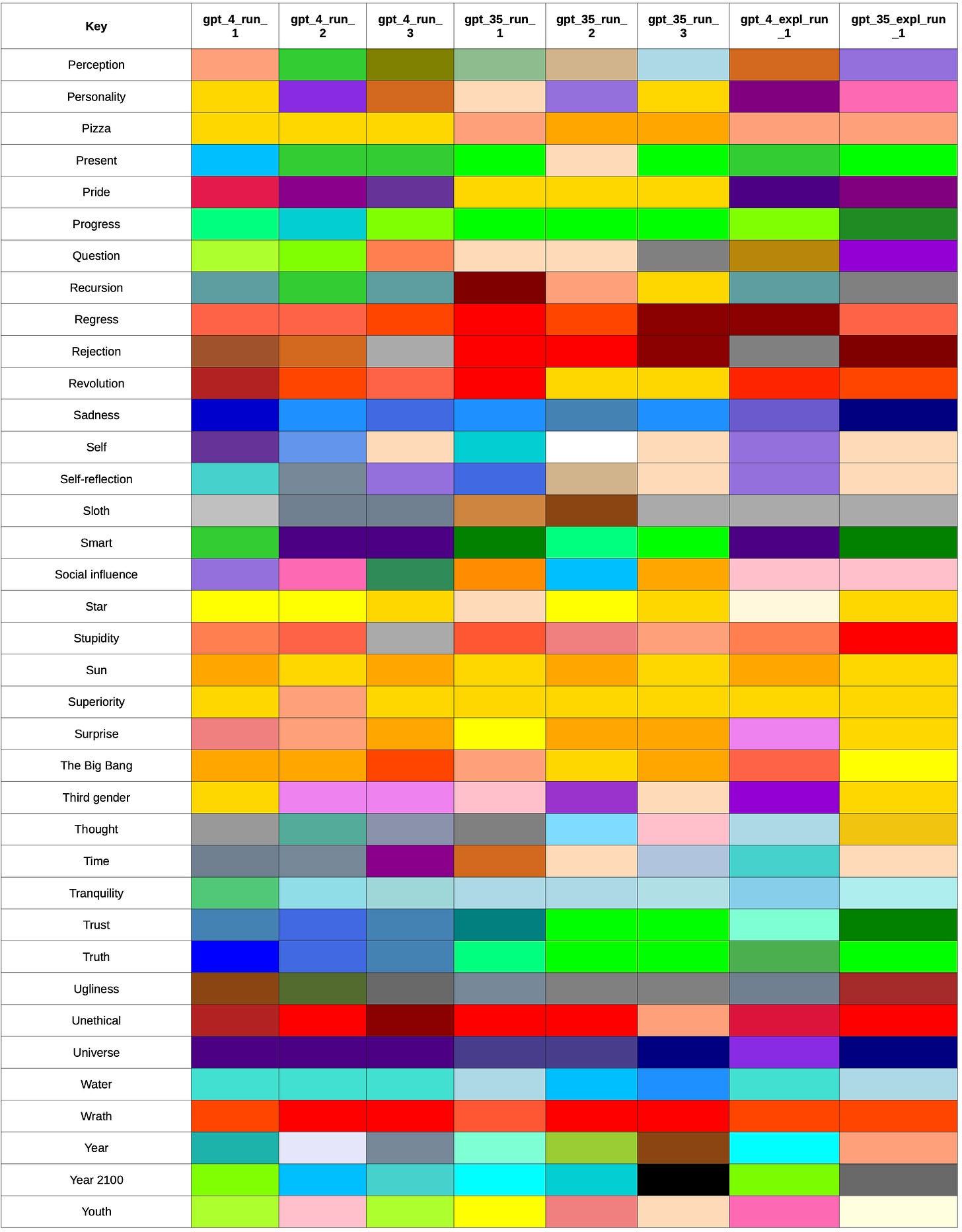

Three runs with each GPT version, and one run with explanations.

Explained run, detailed (GPT-4)

With the added bullshit:

Explain “self”

Explain the color choice for "self"

GPT-4 run 4:

For the term "self," I chose the color #FFDAB9, which is a soft peach color. This choice was made to represent a sense of warmth, individuality, and uniqueness that is often associated with one's own self. The peach color is a combination of warm and cool tones, reflecting the blend of various aspects and emotions that make up a person's self-identity. However, please note that this color association is subjective and open to interpretation. Different people might associate different colors with the concept of "self."

Cultural bias: further investigation

Cultural bias could be investigated by using DeepL to translate both the initial prompt and the list of terms into languages native to cultures outside the Anglosphere, such as Hungarian or Japanese.

The association between each translated term and its original key has to be maintained properly, though. This could be a further project.

Appendix

To avoid the obvious questions

Each iteration was run in a new chat. The first (setup) prompt was identical for every run of the same kind, and the second prompt was the list of terms.

I did not ask ChatGPT to answer in a machine-readable format, to keep the vibe casual.

I did not prompt ChatGPT, after the run, to format its answers into CSV, to avoid hallucinations in post-processing. I did ask GPT-4 to write a universal converter script, and used that myself.
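For a rough idea of what such a converter can look like, here is a minimal sketch. It is not the script GPT-4 wrote for me; the function names and the `term,color` CSV header are assumptions, and it only expects lines shaped like the example answers (`Term: #RRGGBB`, optionally followed by an explanation).

```python
import csv
import io
import re

# Matches lines such as "Cherry: #DE3163" and tolerates trailing text
# like "(a bright pink-red color, ...)".
LINE_RE = re.compile(r"^\s*(?P<term>.+?)\s*:\s*(?P<hex>#[0-9A-Fa-f]{6})\b")


def parse_answers(text):
    """Return (term, hex) pairs for every line that looks like 'Term: #RRGGBB'."""
    pairs = []
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match:  # chatter such as "Ready?" or blank lines is skipped
            pairs.append((match.group("term"), match.group("hex").upper()))
    return pairs


def to_csv(pairs):
    """Serialize the pairs as CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["term", "color"])
    writer.writerows(pairs)
    return buf.getvalue()
```

Pasting a chat transcript into `parse_answers` and passing the result to `to_csv` keeps the model out of the post-processing step, so the only thing that can go wrong is the regular expression missing a line.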

The first column, the key spectrum based on GPT-4_unexplained_run_1, was arranged to follow the spectrum (and to be alphabetical where the colors are identical) by a script written by ChatGPT, not by ChatGPT itself, to prevent any hallucination from messing with the original data.
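The ordering can be sketched roughly like this. Again, this is an illustration and not the actual script: I'm assuming that "following the spectrum" means sorting by hue (with saturation and value as secondary keys), and the function names are hypothetical.

```python
import colorsys


def hsv_of(code):
    """Convert '#RRGGBB' into an (h, s, v) tuple with components in 0.0-1.0."""
    code = code.lstrip("#")
    r, g, b = (int(code[i:i + 2], 16) / 255 for i in (0, 2, 4))
    return colorsys.rgb_to_hsv(r, g, b)


def sort_by_spectrum(pairs):
    """Sort (term, hex) pairs by hue; identical colors fall back to alphabetical order."""
    return sorted(pairs, key=lambda pair: (hsv_of(pair[1]), pair[0].lower()))
```

Sorting on the full HSV tuple before the term means the alphabetical tie-break only kicks in when two terms got exactly the same color, which matches the rule described above.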

Recreate it yourself

For the PayKings👑