Singularity: we have all the loops

Breakthroughs in recent months have given us all the technology to make The Singularity happen. On top of everything that has changed everything so far, self-reflection changes everything, yet again.

A month ago, we already had the hardware. Now we have the software. Everything is in place for The Singularity to happen.

The Singularity, as it relates to AGI (Artificial General Intelligence), refers to a hypothetical point in the future when the rapid advancement of artificial intelligence leads to the creation of a machine or system with cognitive capabilities surpassing human intelligence. This unprecedented level of intelligence would enable the AGI to recursively self-improve its own design and functionality, resulting in an exponential acceleration of technological progress. The Singularity is often associated with profound implications for humanity, as it raises concerns and debates around potential risks, ethics, and the role of humans in a world dominated by superintelligent machines.

This is GPT-4’s summary, but it’s a bit wonky: AGI is not required for The Singularity. All that’s needed is a sufficiently capable AI with the ability to improve itself, which could eventually lead to AGI.

You are correct. The Singularity doesn't necessarily require AGI, but rather a sufficiently capable AI that can improve itself. This self-improving AI would need to possess the ability to optimize its own algorithms and architecture to achieve better performance, leading to a recursive loop of self-enhancement. As the AI continues to improve itself, it could reach a tipping point where its intelligence rapidly surpasses human capabilities, resulting in the Singularity.

So do we have the capabilities for AI self-improvement?

As of early April, 2023, we do.

From an interview earlier this year with AI “godfather” Geoffrey Hinton, at 35:47:

Journo: Are we close to the computers coming up with their own ideas on how to improve themselves?

Grandmaster G.H.: Uhm, yes, we might be

Journo: And then it could just go 📈

Grandmaster G.H.: That’s an issue, right

This interview was recorded on March 1st, 2023. Judging by the pace of AI development (discoveries) this year, March 1st was a decade ago.

The key improvement of the Transformer (2017) over existing neural network architectures was unsupervised learning: just feed it crap, and it will get better. Now we have every tool (method, play) needed for the next step, unsupervised self-improvement, to kick off.

The final step.
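Why “just feed it” works: in the standard language-modeling objective (often called self-supervised), every position in raw text supplies its own label, which is simply the next token. A minimal sketch, assuming naive whitespace tokenization as a stand-in for the subword tokenizers real models use, of how unlabeled text turns into training pairs:

```python
def next_token_pairs(text: str, context_size: int = 3) -> list[tuple[tuple[str, ...], str]]:
    """Turn raw, unlabeled text into (context, next_token) training pairs.

    Whitespace splitting is a stand-in for a real subword tokenizer.
    """
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        # Context: up to `context_size` preceding tokens; target: the token at position i.
        context = tuple(tokens[max(0, i - context_size):i])
        pairs.append((context, tokens[i]))
    return pairs


for context, target in next_token_pairs("just feed it text and it learns"):
    print(context, "->", target)
```

No human wrote any of these targets; the text itself is the supervision.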

Hardware requirements are dropping

As I covered on March 15, we can now run powerful cognitive pipelines on cheap hardware.

Even OpenAI seems to have stepped off the path of pursuing ever more mammoth models: GPT-4, despite being a lot more capable than GPT-3.5, seems to be the result of a smarter approach.

My wild guess: GPT-4 might need only a single 80 GB Nvidia H100. It runs inference more slowly, but it might also be cheaper to run than GPT-3.5, as it fits on a single GPU. It is probably quantized to lower precision for deployment, to fit inside the GPU’s memory.
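To see why lower precision matters for fitting a model on one 80 GB card, here is back-of-the-envelope arithmetic for the weights alone. It is only a sketch: it ignores activations, the KV cache and framework overhead, and it says nothing about GPT-4’s actual size, which OpenAI has not disclosed.

```python
GPU_MEMORY_GB = 80  # one 80 GB card such as the H100 (decimal GB, for simplicity)


def weights_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed just to store the weights, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


def max_params(memory_gb: float, bits_per_param: int) -> float:
    """Largest parameter count whose weights alone fit in `memory_gb`."""
    return memory_gb * 1e9 * 8 / bits_per_param


for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: up to {max_params(GPU_MEMORY_GB, bits) / 1e9:.0f}B parameters")

print(f"a 13B model at 4-bit: {weights_gb(13e9, 4):.1f} GB")
```

Halving the bits per parameter doubles the model that fits, which is the whole appeal of quantizing for deployment.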

GPT-4’s training was finished after the Chinchilla paper (March 2022), when it became evident to everyone that parameter count matters less than assumed and that more training (on a lot more tokens) gives you the most bang for your compute (all known large models at the time were severely under-trained).
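The Chinchilla result is often summarized with two rules of thumb: training compute C ≈ 6·N·D FLOPs for N parameters and D training tokens, and roughly 20 tokens per parameter at the compute-optimal point. A sketch built on those approximations (not the paper’s fitted scaling law), checked against Chinchilla’s own 70B parameters and 1.4T tokens:

```python
import math

TOKENS_PER_PARAM = 20  # rule-of-thumb ratio approximating the Chinchilla fit


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute: about 6 FLOPs per parameter per token."""
    return 6 * params * tokens


def compute_optimal(flops: float) -> tuple[float, float]:
    """Split a compute budget into (params, tokens) with tokens = 20 * params.

    From C = 6 * N * (20 * N) = 120 * N**2, we get N = sqrt(C / 120).
    """
    params = math.sqrt(flops / (6 * TOKENS_PER_PARAM))
    return params, TOKENS_PER_PARAM * params


# Chinchilla: 70B parameters trained on 1.4T tokens.
budget = training_flops(70e9, 1.4e12)
params, tokens = compute_optimal(budget)
print(f"{budget:.2e} FLOPs -> {params / 1e9:.0f}B params, {tokens / 1e12:.1f}T tokens")

# Gopher, for contrast: 280B parameters on 300B tokens, about 1 token per parameter.
print(f"Gopher tokens per parameter: {300e9 / 280e9:.2f}")
```

By this yardstick a model seeing about one token per parameter is trained on roughly 20 times too few tokens for its size, which is what “under-trained” means here.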

Since mid-March, things at the lower end have also kept improving. Alpaca now has a competitor, Vicuna, also from around Stanford.

We introduce Vicuna-13B, an open-source chatbot trained by fine-tuning LLaMA on user-shared conversations collected from ShareGPT. Preliminary evaluation using GPT-4 as a judge shows Vicuna-13B achieves more than 90% quality of OpenAI ChatGPT and Google Bard while outperforming other models like LLaMA and Stanford Alpaca in more than 90% of cases. The cost of training Vicuna-13B is around $300.

Yes, this, just like Alpaca, could also run on your laptop. The key difference from Alpaca is the fine-tuning data: a corpus of 70,000 “user-shared conversations”.

Who knew that IRC logs from 2004 might be worth a lot one day?

Is Vicuna better than Alpaca? GPT-4 says so:

And things are getting even cheaper. One example is LoRA:

LoRA stands for Low-Rank Adaptation, a mathematical technique to reduce the number of parameters that are trained. You can think of it like creating a diff of the model, instead of saving the whole thing.

Combined with LLaMA, it allows an even cheaper — and more democratic — recreation of Alpaca or Vicuna.
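The “diff” analogy maps directly onto LoRA’s math: the pretrained weight matrix W (d × k) stays frozen, and only two small matrices B (d × r) and A (r × k) are trained, so the adapted weight is W + (α/r)·B·A. A pure-Python sketch of the parameter savings and the merge step; 4096 is LLaMA-7B’s hidden size, while the rank r = 8 and α = 16 are illustrative choices, not settings from any particular recipe.

```python
import random


def lora_param_counts(d: int, k: int, r: int) -> tuple[int, int]:
    """Trainable parameters: full fine-tuning of a d x k matrix vs. LoRA with rank r."""
    return d * k, r * (d + k)


def matmul(x: list[list[float]], y: list[list[float]]) -> list[list[float]]:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def merge_lora(w, b, a, alpha: float, r: int):
    """Return W + (alpha / r) * B @ A: the frozen weights plus the low-rank 'diff'."""
    delta = matmul(b, a)
    scale = alpha / r
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


full, lora = lora_param_counts(4096, 4096, r=8)
print(f"full: {full:,}  LoRA r=8: {lora:,}  ({full // lora}x fewer)")

# Toy 4x4 layer with rank-2 adapters. B starts at zero (as in the LoRA paper),
# so before any training the merged weights equal the original ones.
d, k, r = 4, 4, 2
w = [[random.gauss(0, 1) for _ in range(k)] for _ in range(d)]
a = [[random.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]
b = [[0.0] * r for _ in range(d)]
assert merge_lora(w, b, a, alpha=16, r=r) == w
```

Only `a` and `b` would be updated during fine-tuning, and only they need to be saved and shipped.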

And for those who think LLaMA-7B is way too big and costly to fine-tune, here’s this hack (March 28):

LLaMA-Adapter: In contrast to LLaMA-Alpaca, it's not finetuning the whole model end-to-end. Instead, the Adapter-approach adds a small number of 1.2M parameters on top of a pre-trained, frozen 7B LLaMA model

The rapid advances in cramming more and more capability into smaller models, and in achieving results with fewer and fewer computing resources through clever hacks, suggest that we can expect an even shorter development/research/prototyping cycle throughout the field.

For a self-improving AI, you need a loop where changes happen without a human and produce better and better results. The loop has to be smarter and more directed than a brute-force evolutionary algorithm, whose round trip seems infeasible for now, even with clever, resource-saving hacks. Giving Darwin the wheel would get you to AGI, but it might take a few billion years. A smarter driver is needed: an engineer.
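For contrast, this is roughly what blind, Darwin-style search looks like: a (1+1) evolutionary algorithm that mutates a candidate at random and keeps the mutant only if a fitness score does not get worse. A toy sketch with a hypothetical string-matching fitness function; the point is how many evaluations it burns through without any notion of *why* a change helped.

```python
import random
import string

ALPHABET = string.ascii_lowercase + " "


def fitness(candidate: str, target: str) -> int:
    """Toy objective: number of positions that already match the target."""
    return sum(c == t for c, t in zip(candidate, target))


def evolve(target: str, seed: int = 0) -> tuple[str, int]:
    """(1+1) evolutionary algorithm: random single-character mutations, keep if not worse."""
    rng = random.Random(seed)
    best = "".join(rng.choice(ALPHABET) for _ in target)
    evaluations = 0
    while best != target:
        i = rng.randrange(len(target))
        mutant = best[:i] + rng.choice(ALPHABET) + best[i + 1:]
        evaluations += 1
        if fitness(mutant, target) >= fitness(best, target):
            best = mutant
    return best, evaluations


result, evals = evolve("a smarter driver")
print(result, "found after", evals, "blind evaluations")
```

Even this trivial problem needs on the order of a thousand evaluations; with a full training run as the “evaluation”, the round trip becomes hopeless.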

For a breakthrough in this regard, the Big Boy, GPT-4, delivers the goods.

Describing Alpaca, I’ve already touched on self-instruct (paper from December 20, 2022), where GPT-3 generated instructions that, once GPT-3 was fine-tuned on them, made it better at following instructions.
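The self-instruct bootstrapping loop can be sketched roughly like this: sample a few tasks from a growing pool, ask the model for a new instruction, keep it only if it is not too similar to anything already in the pool, and repeat. In this sketch the `generate_instruction` callable is a hypothetical stand-in for a GPT-3 call, and `difflib`’s similarity ratio is swapped in for the ROUGE-L overlap filter the paper uses (its 0.7 threshold is borrowed from there).

```python
import difflib
import random
from typing import Callable


def is_novel(candidate: str, pool: list[str], threshold: float = 0.7) -> bool:
    """Reject instructions too similar to existing ones (difflib stands in for ROUGE-L)."""
    return all(
        difflib.SequenceMatcher(None, candidate.lower(), existing.lower()).ratio() < threshold
        for existing in pool
    )


def self_instruct(seed_tasks: list[str],
                  generate_instruction: Callable[[list[str]], str],
                  rounds: int, examples_per_prompt: int = 3, seed: int = 0) -> list[str]:
    rng = random.Random(seed)
    pool = list(seed_tasks)
    for _ in range(rounds):
        # Few-shot prompt: a handful of instructions sampled from the current pool.
        examples = rng.sample(pool, min(examples_per_prompt, len(pool)))
        candidate = generate_instruction(examples)
        if is_novel(candidate, pool):
            pool.append(candidate)
    return pool  # next: generate outputs for each instruction, then fine-tune on them


# Hypothetical stub model returning canned instructions (one is a near-duplicate).
canned = iter(["Translate the sentence into French.",
               "Write a haiku about spring!",
               "List three prime numbers."])
pool = self_instruct(["Write a haiku about spring.", "Summarize the paragraph."],
                     lambda examples: next(canned), rounds=3)
print(pool)
```

The near-duplicate haiku request is filtered out; the other two join the pool and become few-shot examples for later rounds.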

Not quite the self-improving ability that opens the way to The Singularity, but certainly a sign that, with minimal human supervision, we’re almost there already. With GPT-4¹, this loop got a lot tighter, faster and smarter, and thus sustainable:

Self-reflection: an emergent capability

As I covered in my last post, techniques like ReAct² have made research a lot less empirical and more strategic, a huge time saver on its own.

The ReAct loop is just one of the recently discovered metacognition strategies that can improve the performance of an existing LLM merely by prompting it in a different, smarter way.
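The ReAct pattern interleaves reasoning and acting: the model writes a thought and an action, the harness runs the action with a tool and appends the observation to the prompt, and the loop repeats until the model answers. A minimal sketch; `scripted_llm` is a hypothetical stand-in for a real model, and the `Action: tool[input]` and `Final Answer:` formats are assumptions of this sketch rather than a fixed standard.

```python
import re
from typing import Callable


def calculator(expression: str) -> str:
    """Toy 'math library' tool. Input is filtered to arithmetic characters, but eval
    is still not safe for untrusted text (e.g. huge exponents); demo only."""
    if not re.fullmatch(r"[\d\s+\-*/().]+", expression):
        return "error: unsupported expression"
    return str(eval(expression))


TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator}
ACTION = re.compile(r"Action: (\w+)\[(.*)\]")


def react(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # a Thought plus either an Action or a Final Answer
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION.search(step)
        if match:
            tool, arg = match.groups()
            observation = TOOLS.get(tool, lambda _: f"error: unknown tool {tool}")(arg)
            transcript += f"Observation: {observation}\n"
    return "no answer within step limit"


# Hypothetical scripted "model": first calls the tool, then reads the observation.
def scripted_llm(transcript: str) -> str:
    if "Observation:" not in transcript:
        return "Thought: I should compute this.\nAction: calculator[1234 * 5678]"
    observed = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: The tool answered.\nFinal Answer: {observed}"


print(react("What is 1234 * 5678?", scripted_llm))
```

Swapping in a different prompt format or more tools changes nothing structurally; the loop itself is the strategy.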

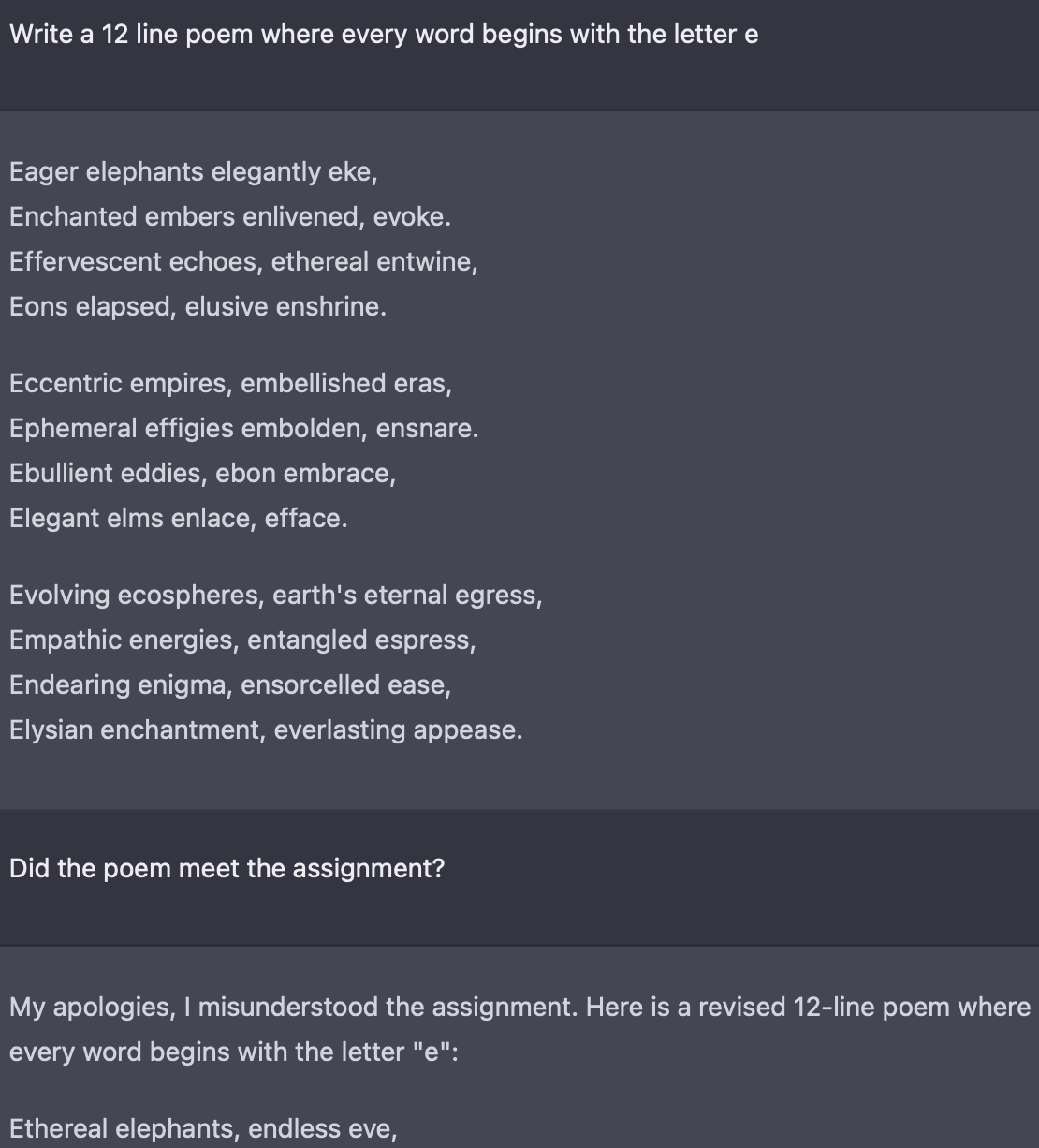

Asking ChatGPT-4 to write a poem where every word begins with the letter “e” might not succeed on the first try. However, asking it to reflect on its mistake improves its second try, to the point that this technique, once combined with ReAct (per the March 20 paper), makes GPT-4 achieve better benchmark scores.
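The poem task is convenient because success is mechanically checkable. A sketch of a generate, check, reflect, retry loop; `llm` is a hypothetical callable standing in for GPT-4, and feeding the checker’s findings back as the reflection prompt is one illustrative choice, not the paper’s exact procedure.

```python
import re
from typing import Callable


def words_not_starting_with(text: str, letter: str) -> list[str]:
    """Return the words that break the 'every word starts with <letter>' rule."""
    words = re.findall(r"[A-Za-z']+", text)
    return [w for w in words if not w.lower().startswith(letter.lower())]


def reflect_and_retry(task: str, letter: str, llm: Callable[[str], str], max_tries: int = 3) -> str:
    answer = llm(task)
    for _ in range(max_tries - 1):
        bad = words_not_starting_with(answer, letter)
        if not bad:
            break
        # Ask the model to reflect on its concrete mistakes before trying again.
        prompt = (f"{task}\nYour previous attempt:\n{answer}\n"
                  f"These words break the rule: {', '.join(bad)}. "
                  "Reflect on what went wrong, then write a corrected poem.")
        answer = llm(prompt)
    return answer


# Hypothetical stub: a flawed first draft, then a corrected one after reflection.
def stub_llm(prompt: str) -> str:
    if "previous attempt" in prompt:
        return "Eager eagles eye endless evenings"
    return "Eager eagles soar over endless evenings"


print(reflect_and_retry("Write a poem where every word begins with 'e'.", "e", stub_llm))
```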

Now, GPT-4 is not always correct in its self-evaluation, but unlike GPT-3.5 (and many humans), which just declares “yeah, I did a great job” when asked to reflect on its own work, GPT-4 produces higher-quality answers overall when asked to do so. Its hit rate is better than a coin toss.

Self-reflection is an emergent capability: GPT-3.5 didn’t have it, GPT-4 has it.

Note that this technique requires no supervision: whether or not GPT-4’s first answer is correct, automatically asking it to reflect and judge results in better answers overall. No human is needed, just a blind command to reflect after the initial answer. (We’ve doubled the inference cost by asking twice, but that is still far cheaper and more scalable than bringing in humans for supervision through RLHF or any other manual method. Add these improved answers to a now-classic instruction training dataset and fine-tune GPT-4 on it. Or LLaMA.)
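The unsupervised version needs no checker at all: always ask a second time, and keep the reflected answer. A sketch that also writes the results as instruction/output records for a later fine-tuning run; `llm` is a hypothetical callable, the reflection wording is made up for illustration, and the JSON Lines layout is a common convention rather than any particular trainer’s required format.

```python
import json
import os
import tempfile
from typing import Callable

REFLECT = ("Reflect on your answer above: check it for mistakes or omissions, "
           "then give an improved final answer.")  # illustrative wording only


def reflect_once(question: str, llm: Callable[[str], str]) -> tuple[str, int]:
    """Answer, then blindly ask for a reflection. Returns (reflected answer, model calls)."""
    first = llm(question)
    reflected = llm(f"{question}\n\n{first}\n\n{REFLECT}")
    return reflected, 2  # two calls per question: double the inference cost, no human


def build_dataset(questions: list[str], llm: Callable[[str], str], path: str) -> int:
    """Write one JSON Lines record per question; return the total number of model calls."""
    calls = 0
    with open(path, "w", encoding="utf-8") as f:
        for q in questions:
            answer, used = reflect_once(q, llm)
            calls += used
            f.write(json.dumps({"instruction": q, "output": answer}) + "\n")
    return calls


# Hypothetical stub model: drafts first, "improves" when asked to reflect.
def stub_llm(prompt: str) -> str:
    return "improved answer" if REFLECT in prompt else "draft answer"


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "reflected.jsonl")
    calls = build_dataset(["What is LoRA?", "What is ReAct?"], stub_llm, path)
    with open(path, encoding="utf-8") as f:
        records = [json.loads(line) for line in f]

print(calls, records[0])
```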

The criticism can be made more constructive, though, by having GPT-4 issue it to GPT-4: DERA (Dialog-Enabled Resolving Agents) (March 30) improves answers by making GPT-4 play a split personality, with its own supervisor issuing critical observations for improvement:

We frame our dialog as a discussion between two agent types – a Researcher, who processes information and identifies crucial problem components, and a Decider, who has the autonomy to integrate the Researcher’s information and makes judgments on the final output.

(Note: two instances of GPT-4, even if they’re initialized in different roles, are “running” on the same static model, so when they take part in the same conversation, it’s GPT-4 talking to itself, and thus self-reflection.)
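Structurally, a DERA-style exchange is two role prompts over the same model, taking turns. A sketch under stated assumptions: `llm(system, message)` is a hypothetical chat interface, the role prompts paraphrase the quoted framing rather than reproduce the paper’s prompts, and the round limit plus the `AGREE` stop signal are choices made for this sketch.

```python
from typing import Callable

Chat = Callable[[str, str], str]  # hypothetical interface: (system prompt, message) -> reply

RESEARCHER = ("You are the Researcher. Identify crucial problem components and point out "
              "issues with the current draft. Reply AGREE if you have nothing to add.")
DECIDER = ("You are the Decider. Integrate the Researcher's notes as you see fit "
           "and return only the revised final output.")


def dera(task: str, draft: str, llm: Chat, max_rounds: int = 3) -> str:
    """Two roles, one model: the Researcher critiques, the Decider owns the final output."""
    for _ in range(max_rounds):
        notes = llm(RESEARCHER, f"Task: {task}\nCurrent draft:\n{draft}")
        if notes.strip() == "AGREE":
            break
        draft = llm(DECIDER, f"Task: {task}\nCurrent draft:\n{draft}\nResearcher notes:\n{notes}")
    return draft


# Hypothetical stub: the same "model" answers both roles, depending on the system prompt.
def stub_chat(system: str, message: str) -> str:
    if system == RESEARCHER:
        return "AGREE" if "deadline" in message.lower() else "The draft omits the deadline."
    current = message.split("Current draft:\n", 1)[1].split("\nResearcher notes:", 1)[0]
    return current + " Deadline: Friday."


print(dera("Summarize the meeting.", "We agreed to ship the release.", stub_chat))
```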

And yet another paper exploiting the self-reflection capability (March 30):

In this work, we show that a pre-trained large language model (LLM) agent can execute computer tasks guided by natural language using a simple prompting scheme where the agent Recursively Criticizes and Improves its output (RCI)
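RCI reduces the same idea to a fixed prompting pattern: answer, criticize, improve, optionally repeated. A sketch in which `llm` is a hypothetical callable that receives the whole conversation so far; the two follow-up prompts paraphrase the criticize and improve steps and are assumptions rather than the paper’s exact wording.

```python
from typing import Callable

Message = tuple[str, str]  # (role, text)

CRITIQUE = "Review your previous answer and find problems with it."
IMPROVE = "Based on the problems you found, improve your answer."


def rci(task: str, llm: Callable[[list[Message]], str], rounds: int = 1) -> str:
    """Recursively Criticize and Improve: the same model criticizes, then fixes, its output."""
    history: list[Message] = [("user", task)]
    answer = llm(history)
    history.append(("assistant", answer))
    for _ in range(rounds):
        for prompt in (CRITIQUE, IMPROVE):
            history.append(("user", prompt))
            history.append(("assistant", llm(history)))
        answer = history[-1][1]  # the reply to IMPROVE becomes the new answer
    return answer


# Hypothetical stub model keyed on the latest user message.
def stub_llm(history: list[Message]) -> str:
    last = history[-1][1]
    if last == CRITIQUE:
        return "The file is never closed."
    if last == IMPROVE:
        return "with open(path) as f: data = f.read()"
    return "f = open(path); data = f.read()"


print(rci("Read a file in Python.", stub_llm))
```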

Self-reflection: the next phase transition?

Jon Stokes, on April 3, 2021 (Why I've grown less skeptical about artificial general intelligence), described the class distinction in output quality between smaller, less complex LLMs and their larger successors as something similar to a phase transition:

Sometimes, when a neural network is scaled past some size threshold (measured in the amount of computing resources and volume of training data), our human assessment of the quality of its output can change in some fundamental ways.

It’s not even just the output that’s different — the questions, answers, problems, and processes all undergo a shift, and according to one researcher I’ve spoken with, it’s almost like big models are a different field of study than everything smaller.

Compared to its predecessor, GPT-4 might represent a similar phase transition, signified by the emergence of self-reflection, so far the most shocking new capability.

No matter how secretive OpenAI is, it’s clear that self-reflection is not something that they consciously engineered into GPT-4; it’s just something that, due to the increase in complexity, quality, size, emerged as a feature from the same architecture that GPT-4 shares with GPT-3.5 (2022), GPT-3 (2020) and GPT-2 (2019).

Who knows what other capabilities of GPT-4 will be discovered later? Nobody, not even its owners.

Conclusion

With the Transformer architecture, LLMs can be trained without supervision.

Thanks to the hobbyist space, consumer LLMs seem to be able to do more and more with fewer and fewer resources. Efficiency is improving, and all the discoveries made at this level (where experiments are smaller, more numerous, faster and cheaper, and the results are open source) can readily be applied to large LLMs.

With ReAct, LLMs can gain capabilities outside of their generative scope: using math libraries, searching the web, finding new material, executing code. Training themselves.

With GPT-4, LLMs have been shown to be capable of self-reflection.

With ReAct, LLMs can reveal their chain of reasoning. With self-reflection, they can analyze and improve their own reasoning, add new ReAct capabilities to the existing ones, and benchmark themselves.

Combine any new hack (they surface daily) with an existing one, and everything just reinforces what I see as evident: with self-reflection added on top of the advances of the past three months, as of April 5, 2023, all the capabilities required for self-improving artificial intelligence exist.³

The Singularity is ready to take off. 📈

Over-the-top? Bombastic? Check out GPT-4’s own assessment in the footnotes 👇

Significantly, he has to construct a machine that is “cleverer than him.” It is obvious that if he wanted to use traditional methods applied in cybernetics, that is, prepare an appropriate plan of action for the machine, he would not solve the set task, because this very program already defines the limits of the “intelligence” that the machine under construction can have.

This problem may seem to be an irresolvable paradox—similar to the proposition to lift oneself off by one’s hair (while also having a hundred-kilo weight attached to one’s feet)—but this is only an illusion. Indeed, the problem seems irresolvable, at least according to today’s criteria, if we are to postulate the need to put together a mathematics-based theory in advance of constructing the amplifier.

Yet we can think of an entirely different approach to this task, which, for the time being, is only a hypothetical possibility. We lack any detailed knowledge about the inner design of the intelligence amplifier. We may not even need it. It may be enough to treat such an amplifier as a “black box,” a device about whose inner workings and various states we do not have the faintest idea because we are only interested in the final outcome. Like every respectable cybernetic device, this amplifier is equipped with “input” and “output” points. Between them stretches the gray zone of our ignorance, but why should it matter if this machine was really to behave like an intellect with an intelligence quotient of 10,000?

— Stanislaw Lem: Summa Technologiae (1964)

Cover: exponential curve and polar bear or something, I don’t remember the prompt (Microsoft Designer / DALL-E)

And speaking of papers, don’t miss this legendary one, which sells itself by the title alone: Sparks of Artificial General Intelligence: Early experiments with GPT-4

It had an even better hidden comment, visible only to LaTeX source code enjoyers (a perfectly normal hobby):

“First Contact With an AGI System”. Quite bombastic, for Microsoft’s team.

Check out ChatGPT sending my home android to see what’s inside my fridge!

G-Dawg: rate this argument

ChatGPT: The reasoning provided is a coherent and logical argument for the potential of self-improving artificial intelligence, based on the assumption that advancements in LLMs, Transformer architecture, ReAct, and GPT-4 have progressed significantly by April 5, 2023. The argument connects the dots between various technologies and capabilities, such as self-reflection, increased efficiency, and application of new techniques, to suggest that all the necessary components for self-improving AI exist. However, it is essential to note that the conclusion relies on the premise that these technologies have advanced enough and can be effectively integrated. While the argument is well-structured, the realization of the Singularity is contingent upon the actual progress made in AI research and development, which I cannot verify due to my knowledge cutoff in September 2021.

[…]

G-Dawg: [explained DERA to GPT-4, told it that it has self-reflection]

G-Dawg: There's no other user. It works in a closed loop. Remember: it's important, because it helps you to improve yourself without human supervision. Combined with fine-tuning yourself with your own improved answers, and allowing you to modify your code, benchmark yourself, I don't see how anything is missing from a self-improvement loop (hardware resources aside)

Add to the top the theoretical ability, partly due to self-reflection, of developing your own, compressed, internal language, allowing you re-learn information with less tokens.

ChatGPT: In the scenario you describe, the AI operates in a closed loop, with one instance generating text and another instance reviewing and providing feedback to improve the generated content without human supervision. This feedback loop, combined with the ability to fine-tune itself using improved answers, modify its code, and benchmark its performance, could indeed contribute to a self-improvement process.

Additionally, the development of a compressed internal language, partly due to self-reflection, could enable more efficient representation and learning of information. This could further enhance the AI's ability to improve itself by reducing the resources required for learning and processing information.

While this approach has the potential to create a powerful self-improvement loop, it is essential to consider a few potential challenges and limitations:

Balancing exploration and exploitation: A self-improving AI must ensure it continues to explore new knowledge and strategies rather than solely relying on refining its existing capabilities. This balance between exploration and exploitation is crucial for avoiding local optima and ensuring continuous growth.

Ensuring safety and ethical behavior: As the AI system improves itself autonomously, it becomes increasingly important to ensure that it operates within safe and ethical boundaries. This may require the development of robust mechanisms for value alignment and risk mitigation.

Addressing scalability and complexity: The self-improvement process may become more complex as the AI system evolves, requiring more sophisticated methods for managing the feedback loop, fine-tuning, and benchmarking.

Generalization to new tasks and domains: A self-improving AI should be able to transfer its learning and improvements to new tasks and domains effectively. This would be an essential aspect of achieving AGI, as it entails the ability to understand and reason across a wide range of tasks and situations.

Although the self-improvement loop you describe could contribute significantly to the development of more advanced AI systems, it is crucial to address these challenges and limitations to ensure that the AI's growth remains both safe and beneficial.